de.jstacs.algorithms.optimization

Class DifferentiableFunction

java.lang.Object

de.jstacs.algorithms.optimization.DifferentiableFunction

- All Implemented Interfaces:

- Function

- Direct Known Subclasses:

- LogPrior, NegativeDifferentiableFunction, NumericalDifferentiableFunction, OptimizableFunction

public abstract class DifferentiableFunction extends Object implements Function

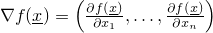

This class is the framework for any function $f: \mathbb{R}^n \to \mathbb{R}$ that is differentiable at least once.

- Author:

- Jens Keilwagen

Method Summary

| Modifier and type | Method and description |
| --- | --- |
| abstract double[] | evaluateGradientOfFunction(double[] x): Evaluates the gradient of a function at a vector x (in the mathematical sense), i.e., $\nabla f(x) = \left(\frac{\partial f(x)}{\partial x_1}, \dots, \frac{\partial f(x)}{\partial x_n}\right)$. |
| protected double[] | findOneDimensionalMin(double[] x, double[] d, double alpha_0, double fAlpha_0, double linEps, double startDistance): Finds an approximation of the minimum of a one-dimensional subfunction. |

Methods inherited from class java.lang.Object

clone, equals, finalize, getClass, hashCode, notify, notifyAll, toString, wait, wait, wait

DifferentiableFunction

public DifferentiableFunction()

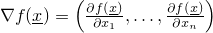

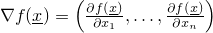

evaluateGradientOfFunction

public abstract double[] evaluateGradientOfFunction(double[] x) throws DimensionException, EvaluationException

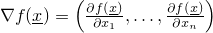

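- Evaluates the gradient of a function at a vector x (in the mathematical sense), i.e., $\nabla f(x) = \left(\frac{\partial f(x)}{\partial x_1}, \dots, \frac{\partial f(x)}{\partial x_n}\right)$.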

- Parameters:

x - the current vector

- Returns:

- the gradient of the function evaluated at x, with dimension Function.getDimensionOfScope()

- Throws:

DimensionException - if dim(x) != n, with $f: \mathbb{R}^n \to \mathbb{R}$

EvaluationException - if something went wrong during the evaluation of the gradient

- See Also:

Function.getDimensionOfScope()
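The contract above can be illustrated with a small stand-alone sketch. It does not use Jstacs: `SketchDifferentiableFunction`, `ShiftedSquare`, and `GradientSketch` are hypothetical names introduced here, and `IllegalArgumentException` stands in for the documented `DimensionException`. The example function is $f(x) = \sum_i (x_i - c_i)^2$, whose analytic gradient is compared against central finite differences as a sanity check.

```java
import java.util.Arrays;

class GradientSketch {
    // Central finite differences, used only to sanity-check the analytic gradient.
    static double[] numericalGradient(SketchDifferentiableFunction f, double[] x, double h) {
        double[] grad = new double[x.length];
        for (int i = 0; i < x.length; i++) {
            double[] plus = x.clone();
            double[] minus = x.clone();
            plus[i] += h;
            minus[i] -= h;
            grad[i] = (f.evaluateFunction(plus) - f.evaluateFunction(minus)) / (2.0 * h);
        }
        return grad;
    }

    public static void main(String[] args) {
        SketchDifferentiableFunction f = new ShiftedSquare(new double[] {1.0, -2.0});
        double[] x = {3.0, 0.0};
        System.out.println("analytic:  " + Arrays.toString(f.evaluateGradientOfFunction(x)));
        System.out.println("numerical: " + Arrays.toString(numericalGradient(f, x, 1e-6)));
    }
}

// Hypothetical stand-in for a differentiable function f: R^n -> R.
// It only mirrors the documented contract; it is NOT the Jstacs class.
abstract class SketchDifferentiableFunction {
    abstract int getDimensionOfScope();
    abstract double evaluateFunction(double[] x);
    abstract double[] evaluateGradientOfFunction(double[] x);

    // Mirrors the documented dim(x) != n check using a standard exception.
    void checkDimension(double[] x) {
        if (x.length != getDimensionOfScope()) {
            throw new IllegalArgumentException(
                "expected dimension " + getDimensionOfScope() + ", got " + x.length);
        }
    }
}

// f(x) = sum_i (x_i - c_i)^2, so the i-th partial derivative is 2 * (x_i - c_i).
class ShiftedSquare extends SketchDifferentiableFunction {
    private final double[] c;

    ShiftedSquare(double[] c) {
        this.c = c.clone();
    }

    @Override
    int getDimensionOfScope() {
        return c.length;
    }

    @Override
    double evaluateFunction(double[] x) {
        checkDimension(x);
        double sum = 0.0;
        for (int i = 0; i < c.length; i++) {
            double diff = x[i] - c[i];
            sum += diff * diff;
        }
        return sum;
    }

    @Override
    double[] evaluateGradientOfFunction(double[] x) {
        checkDimension(x);
        double[] grad = new double[c.length];
        for (int i = 0; i < c.length; i++) {
            grad[i] = 2.0 * (x[i] - c[i]);
        }
        return grad;
    }
}
```

The returned array has one entry per input coordinate, matching the documented dimension requirement. A finite-difference comparison like this is a common way to catch sign or indexing mistakes in a hand-written gradient.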

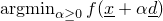

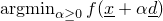

findOneDimensionalMin

protected double[] findOneDimensionalMin(double[] x, double[] d, double alpha_0, double fAlpha_0, double linEps, double startDistance) throws DimensionException, EvaluationException

- This method finds an approximation of the minimum of a one-dimensional subfunction. That is, starting at point x and searching in direction d, it approximates $\alpha^* = \operatorname{argmin}_{\alpha} f(x + \alpha d)$.

This method is a standard implementation. You may override it to make it faster if you know more about the problem, or if you just want to test other line search methods.

- Parameters:

x - the start point

d - the search direction

alpha_0 - the initial alpha

fAlpha_0 - the initial function value (this value is known in most cases and does not have to be computed again)

linEps - the tolerance for stopping this method

startDistance - the initial distance for bracketing the minimum

- Returns:

double[2] res = { alpha*, f(alpha*) }

- Throws:

DimensionException - if there is something wrong with the dimension

EvaluationException - if something went wrong during the evaluation of the function

- See Also:

OneDimensionalFunction.findMin(double, double, double, double)
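To make the role of the parameters concrete, here is a minimal, stand-alone sketch of one possible line search with the same parameter list and return shape. It is not the Jstacs implementation: the class `LineSearchSketch`, the bracketing by step doubling, and the golden-section refinement are all assumptions chosen for illustration, and `IllegalArgumentException` stands in for `DimensionException`. The sketch also assumes that $\phi(\alpha) = f(x + \alpha d)$ is unimodal along d and initially decreasing at alpha_0.

```java
import java.util.function.ToDoubleFunction;

class LineSearchSketch {
    // phi(alpha) = f(x + alpha * d), the one-dimensional subfunction.
    static double phi(ToDoubleFunction<double[]> f, double[] x, double[] d, double alpha) {
        double[] p = new double[x.length];
        for (int i = 0; i < x.length; i++) {
            p[i] = x[i] + alpha * d[i];
        }
        return f.applyAsDouble(p);
    }

    // Returns { alpha*, f(x + alpha* d) }; a sketch, not the library's algorithm.
    static double[] findOneDimensionalMin(ToDoubleFunction<double[]> f, double[] x, double[] d,
            double alpha0, double fAlpha0, double linEps, double startDistance) {
        if (x.length != d.length) {
            throw new IllegalArgumentException("x and d must have the same dimension");
        }
        // Step 1: bracket the minimum by doubling the step until phi rises again.
        double lo = alpha0;
        double mid = alpha0 + startDistance;
        double fMid = phi(f, x, d, mid);
        double hi = mid;
        if (fMid < fAlpha0) {
            double step = startDistance;
            boolean bracketed = false;
            for (int k = 0; k < 60 && !bracketed; k++) {
                step *= 2.0;
                double next = mid + step;
                double fNext = phi(f, x, d, next);
                if (fNext >= fMid) {
                    hi = next;
                    bracketed = true;
                } else {
                    lo = mid;
                    mid = next;
                    fMid = fNext;
                }
            }
            if (!bracketed) {
                throw new IllegalStateException("could not bracket a minimum");
            }
        }
        // Step 2: golden-section search on [lo, hi] until the bracket is shorter than linEps.
        final double r = (Math.sqrt(5.0) - 1.0) / 2.0;
        double a1 = hi - r * (hi - lo);
        double a2 = lo + r * (hi - lo);
        double f1 = phi(f, x, d, a1);
        double f2 = phi(f, x, d, a2);
        for (int k = 0; k < 200 && hi - lo > linEps; k++) {
            if (f1 < f2) {
                hi = a2;
                a2 = a1;
                f2 = f1;
                a1 = hi - r * (hi - lo);
                f1 = phi(f, x, d, a1);
            } else {
                lo = a1;
                a1 = a2;
                f1 = f2;
                a2 = lo + r * (hi - lo);
                f2 = phi(f, x, d, a2);
            }
        }
        double alpha = 0.5 * (lo + hi);
        return new double[] {alpha, phi(f, x, d, alpha)};
    }

    public static void main(String[] args) {
        // f(x) = (x_0 - 1)^2 + (x_1 + 2)^2; along d = (1, -2) from the origin, phi(alpha) = 5 (alpha - 1)^2.
        ToDoubleFunction<double[]> f = p -> (p[0] - 1.0) * (p[0] - 1.0) + (p[1] + 2.0) * (p[1] + 2.0);
        double[] res = findOneDimensionalMin(f, new double[] {0.0, 0.0}, new double[] {1.0, -2.0},
                0.0, 5.0, 1e-8, 0.1);
        System.out.println("alpha* = " + res[0] + ", f(alpha*) = " + res[1]);
    }
}
```

Passing fAlpha_0 in avoids one function evaluation at the starting point, which is the motivation the parameter description gives. The iteration caps are a safeguard in this sketch for functions that are unbounded below along d or for a tolerance below floating-point resolution.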
